This document picks up where the MSDN “DXGI flip model” article and the YouTube videos “DirectX 12: Presentation Modes In Windows 10” and “Presentation Enhancements in Windows 10: An Early Look” left off. It provides developer guidance on how to maximize performance and efficiency in the presentation stack on modern versions of Windows.

Call to action

If you are still using DXGI_SWAP_EFFECT_DISCARD or DXGI_SWAP_EFFECT_SEQUENTIAL (aka "blt" present model), it's time to stop!

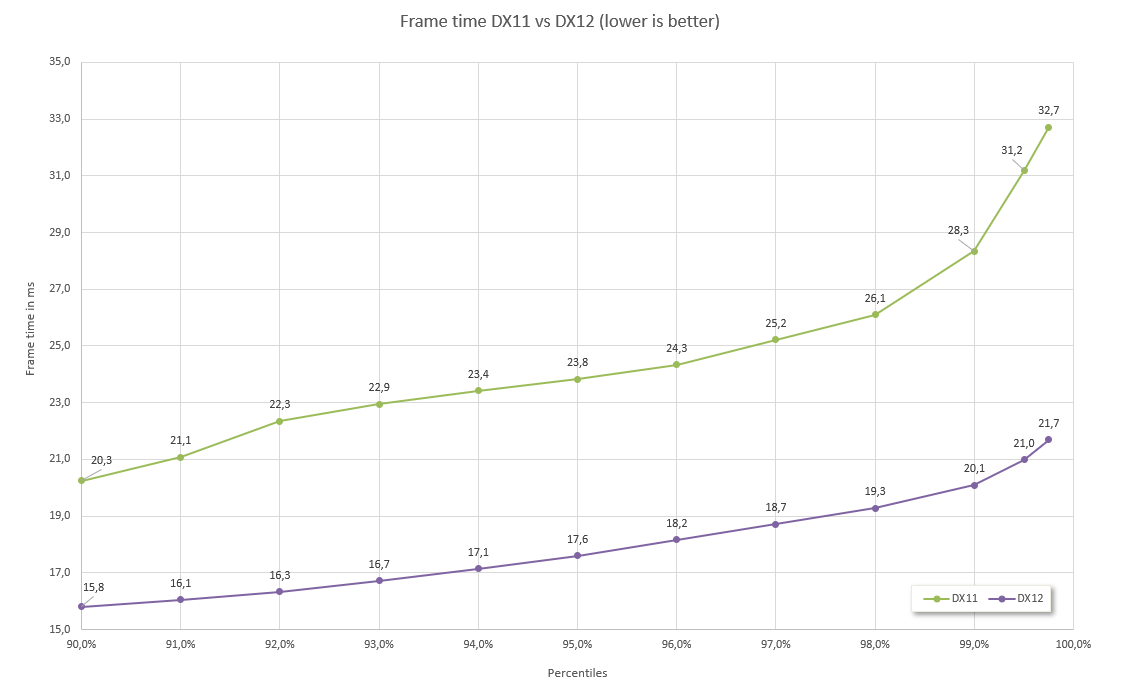

Switching to DXGI_SWAP_EFFECT_FLIP_SEQUENTIAL or DXGI_SWAP_EFFECT_FLIP_DISCARD (aka flip model) will give better performance, lower power usage, and provide a richer set of features.

Flip model presents go as far as making windowed mode effectively equivalent or better when compared to the classic "fullscreen exclusive" mode. In fact, we think it’s high time to reconsider whether your app actually needs a fullscreen exclusive mode, since the benefits of a flip model borderless window include faster Alt-Tab switching and better integration with modern display features.

Why now? Prior to the upcoming Spring Creators Update, blt model presents could result in visible tearing when used on hybrid GPU configurations, often found in high-end laptops (see KB 3158621). In the Spring Creators Update, this tearing has been fixed, at the cost of some additional work. If you are doing blt presents at high framerates across hybrid GPUs, especially at high resolutions such as 4K, this additional work may affect overall performance. To maintain best performance on these systems, switch from blt to flip present model. Additionally, consider reducing the resolution of your swapchain, especially if it isn’t the primary point of user interaction (as is often the case with VR preview windows).

A brief history

What is flip model? What is the alternative?

Prior to Windows 7, the only way to present contents from D3D was to "blt" or copy it into a surface which was owned by the window or screen. Beginning with D3D9’s FLIPEX swapeffect, and coming to DXGI through the FLIP_SEQUENTIAL swap effect in Windows 8, we’ve developed a more efficient way to put contents on screen, by sharing it directly with the desktop compositor, with minimal copies. See the original MSDN article for a high level overview of the technology.

This optimization is possible thanks to the DWM: the Desktop Window Manager, which is the compositor that drives the Windows desktop.

When should I use blt model?

There is one piece of functionality that flip model does not provide: the ability to have multiple different APIs producing contents, which all layer together into the same HWND, on a present-by-present basis. An example of this would be using D3D to draw a window background, and then GDI to draw something on top, or using two different graphics APIs, or two swapchains from the same API, to produce alternating frames. If you don’t require HWND-level interop between graphics components, then you don’t need blt model.

There is a second piece of functionality that was not provided in the original flip model design, but is available now, which is the ability to present at an unthrottled framerate. For an application which desires using sync interval 0, we do not recommend switching to flip model unless the IDXGIFactory5::CheckFeatureSupport API is available, and reports support for DXGI_FEATURE_PRESENT_ALLOW_TEARING. This feature is nearly ubiquitous on recent versions of Windows 10 and on modern hardware.
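The two conditions above can be folded into a single decision. The following is a minimal sketch, not a definitive implementation: `PresentModel`, `ChoosePresentModel`, and `QueryTearingSupport` are hypothetical names introduced for illustration. The Windows-only part uses IDXGIFactory5::CheckFeatureSupport with DXGI_FEATURE_PRESENT_ALLOW_TEARING and assumes you link against dxgi.lib.

```cpp
#ifdef _WIN32
#include <dxgi1_5.h>
#include <wrl/client.h>

// Hypothetical helper: returns true only when IDXGIFactory5 is available
// and reports support for DXGI_FEATURE_PRESENT_ALLOW_TEARING.
inline bool QueryTearingSupport()
{
    Microsoft::WRL::ComPtr<IDXGIFactory5> factory5;
    // On systems without IDXGIFactory5 this call fails, which we treat
    // as "tearing not supported".
    if (FAILED(CreateDXGIFactory1(IID_PPV_ARGS(&factory5))))
        return false;

    BOOL allowTearing = FALSE;
    const HRESULT hr = factory5->CheckFeatureSupport(
        DXGI_FEATURE_PRESENT_ALLOW_TEARING, &allowTearing, sizeof(allowTearing));
    return SUCCEEDED(hr) && allowTearing;
}
#endif

// Hypothetical policy type, not part of DXGI.
enum class PresentModel { Blt, Flip };

// Encodes the guidance from the text:
//  - HWND-level interop between graphics components requires blt model.
//  - Sync interval 0 should only move to flip model when tearing is supported.
//  - Everything else should use flip model.
inline PresentModel ChoosePresentModel(bool needsHwndInterop,
                                       bool wantsSyncInterval0,
                                       bool tearingSupported)
{
    if (needsHwndInterop)
        return PresentModel::Blt;
    if (wantsSyncInterval0 && !tearingSupported)
        return PresentModel::Blt;
    return PresentModel::Flip;
}
```

On Windows, the third argument would typically come from `QueryTearingSupport()`, queried once at startup.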

What’s new in flip model?

If you’ve watched the YouTube video linked above, you’ll see talk about "Direct Flip" and "Independent Flip". These are optimizations that are enabled for applications using flip model swapchains. Depending on window and buffer configuration, it is possible to bypass desktop composition entirely, and directly send application frames to the screen, in the same way that exclusive fullscreen does.

These days, these optimizations can engage in one of 3 scenarios, with increasing functionality:

- DirectFlip: Your swapchain buffers match the screen dimensions, and your window client region covers the screen. The application swapchain is displayed on the screen in place of the DWM swapchain.

- DirectFlip with panel fitters: Your window client region covers the screen, and your swapchain buffers are within some hardware-dependent scaling factor (e.g. 0.25x to 4x) of the screen. The GPU scanout hardware is used to scale your buffer while sending it to the display.

- DirectFlip with multi-plane overlay (MPO): Your swapchain buffers are within some hardware-dependent scaling factor of your window dimensions. The DWM is able to reserve a dedicated hardware scanout plane for your application, which is then scanned out and potentially stretched, to an alpha-blended sub-region of the screen.

With windowed flip model, the application can query hardware support for different DirectFlip scenarios and implement different types of dynamic scaling via use of IDXGIOutput6::CheckHardwareCompositionSupport. One caveat to keep in mind is that if panel fitters are utilized, it’s possible for the cursor to suffer stretching side effects, which is indicated via DXGI_HARDWARE_COMPOSITION_SUPPORT_FLAG_CURSOR_STRETCHED.
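As a rough sketch of how the result of that query might be consumed, the code below decodes the flag bits into a small struct. `CompositionSupport`, `DecodeCompositionSupport`, and `QueryCompositionSupport` are hypothetical names for illustration. The stand-in constants in the non-Windows branch are only there so the sketch compiles elsewhere; they are assumed to mirror the values in dxgi1_6.h, so verify them against your SDK.

```cpp
#include <cstdint>

#ifdef _WIN32
#include <dxgi1_6.h>
#else
// Stand-ins so the sketch compiles off Windows. Assumed to mirror
// DXGI_HARDWARE_COMPOSITION_SUPPORT_FLAGS in dxgi1_6.h.
constexpr std::uint32_t DXGI_HARDWARE_COMPOSITION_SUPPORT_FLAG_FULLSCREEN = 1;
constexpr std::uint32_t DXGI_HARDWARE_COMPOSITION_SUPPORT_FLAG_WINDOWED = 2;
constexpr std::uint32_t DXGI_HARDWARE_COMPOSITION_SUPPORT_FLAG_CURSOR_STRETCHED = 4;
#endif

// Hypothetical, app-side view of what the output reported.
struct CompositionSupport {
    bool fullscreen;       // hardware composition for fullscreen windows
    bool windowed;         // hardware composition for windowed swapchains
    bool cursorMayStretch; // hardware scaling may stretch the cursor image
};

// Decodes the bitmask written by CheckHardwareCompositionSupport.
inline CompositionSupport DecodeCompositionSupport(std::uint32_t flags)
{
    CompositionSupport s;
    s.fullscreen = (flags & DXGI_HARDWARE_COMPOSITION_SUPPORT_FLAG_FULLSCREEN) != 0;
    s.windowed = (flags & DXGI_HARDWARE_COMPOSITION_SUPPORT_FLAG_WINDOWED) != 0;
    s.cursorMayStretch =
        (flags & DXGI_HARDWARE_COMPOSITION_SUPPORT_FLAG_CURSOR_STRETCHED) != 0;
    return s;
}

#ifdef _WIN32
// Queries the given output; a failed query is treated as "no support".
inline CompositionSupport QueryCompositionSupport(IDXGIOutput6* output)
{
    UINT flags = 0;
    if (FAILED(output->CheckHardwareCompositionSupport(&flags)))
        flags = 0;
    return DecodeCompositionSupport(flags);
}
#endif
```

An application that draws its own cursor, or that cares about cursor fidelity, might choose to scale in software when `cursorMayStretch` is set and let the hardware scale otherwise.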

Once your swapchain has been "DirectFlipped", then the DWM can go to sleep, and only wake up when something changes outside of your application. Your app frames are sent directly to screen, independently, with the same efficiency as fullscreen exclusive. This is "Independent Flip", and can engage in all of the above scenarios. If other desktop contents come on top, the DWM can either seamlessly transition back to composed mode, efficiently "reverse compose" the contents on top of the application before flipping it, or leverage MPO to maintain the independent flip mode.

Check out the PresentMon tool to get insight into which of the above was used.

What else is new in flip model?

In addition to the above improvements, which apply to standard swapchains without anything special, there are several features available for flip model applications to use:

- Decreasing latency using DXGI_SWAP_CHAIN_FLAG_FRAME_LATENCY_WAITABLE_OBJECT. When in Independent Flip mode, you can get down to 1 frame of latency on recent versions of Windows, with graceful fallback to the minimum possible when composed.

- Caveat: there was an issue that gave a minimum of two frames of latency in the Anniversary Update and earlier. See https://www.gamedev.net/forums/topic/686507-windows-10-dx12-low-latency-tearing-free-rendering. This is fixed in the Fall Creators Update.

- DXGI_SWAP_EFFECT_FLIP_DISCARD enables a "reverse composition" mode of direct flip, which results in less overall work to display the desktop. The DWM can scribble on the app buffers and send those to screen, instead of performing a full copy into their own swapchain.

- DXGI_SWAP_CHAIN_FLAG_ALLOW_TEARING can enable even lower latency than the waitable object, even in a window on systems with multi-plane overlay support.

- Control over content scaling that happens during window resize, using the DXGI_SCALING property set during swapchain creation.

- Content in HDR formats (R10G10B10A2_UNORM or R16G16B16A16_FLOAT) isn’t clamped unless it’s composed to an SDR desktop.

- Present statistics are available in windowed mode.

- Greater compatibility with the UWP app model and DX12, since both support only flip model.
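For the DXGI_SWAP_CHAIN_FLAG_ALLOW_TEARING item in the list above, here is a minimal sketch of choosing the per-present flag. It assumes the rules from the DXGI documentation as we understand them: DXGI_PRESENT_ALLOW_TEARING is only valid with sync interval 0, on a swapchain created with DXGI_SWAP_CHAIN_FLAG_ALLOW_TEARING, and outside fullscreen exclusive mode. `PresentState`, `ShouldPresentWithTearing`, and `PresentFrame` are hypothetical names.

```cpp
#include <cstdint>

#ifdef _WIN32
#include <dxgi1_5.h>
#endif

// Hypothetical snapshot of the state the app already tracks.
struct PresentState {
    bool createdWithAllowTearing; // swapchain created with DXGI_SWAP_CHAIN_FLAG_ALLOW_TEARING
    bool fullscreenExclusive;     // currently in fullscreen exclusive mode
    std::uint32_t syncInterval;   // value passed to Present
};

// True only when all assumed preconditions for a tearing present hold.
inline bool ShouldPresentWithTearing(const PresentState& s)
{
    return s.createdWithAllowTearing && !s.fullscreenExclusive && s.syncInterval == 0;
}

#ifdef _WIN32
// Presents one frame, adding the tearing flag only when it is permitted.
inline HRESULT PresentFrame(IDXGISwapChain1* swapChain, const PresentState& s)
{
    const UINT flags = ShouldPresentWithTearing(s) ? DXGI_PRESENT_ALLOW_TEARING : 0;
    return swapChain->Present(s.syncInterval, flags);
}
#endif
```

Keeping the decision in one place makes it harder to pass the tearing flag with a non-zero sync interval by accident when a user toggles v-sync at runtime.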

What do I have to do to use flip model?

Flip model swapchains have a few additional requirements on top of blt swapchains:

- The buffer count must be at least 2.

- After Present calls, the back buffer needs to explicitly be re-bound to the D3D11 immediate context before it can be used again.

- After calling SetFullscreenState, the app must call ResizeBuffers before Present.

- MSAA swapchains are not directly supported in flip model, so the app will need to do an MSAA resolve before issuing the Present.
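The buffer-count and MSAA requirements above can be checked before swapchain creation. The sketch below is illustrative only: `FlipSwapChainRequest`, `FlipCheck`, `ValidateForFlipModel`, and `MakeFlipModelDesc` are hypothetical names, and the format and scaling values in the Windows-only helper are arbitrary choices rather than recommendations from this article.

```cpp
#include <cstdint>

#ifdef _WIN32
#include <dxgi1_2.h>
#endif

// Hypothetical, simplified view of the settings an app wants.
struct FlipSwapChainRequest {
    std::uint32_t bufferCount;
    std::uint32_t sampleCount; // 1 means no MSAA
};

enum class FlipCheck { Ok, TooFewBuffers, MsaaNotSupported };

// Checks the two creation-time requirements listed above.
inline FlipCheck ValidateForFlipModel(const FlipSwapChainRequest& r)
{
    if (r.bufferCount < 2)
        return FlipCheck::TooFewBuffers;
    if (r.sampleCount != 1)
        return FlipCheck::MsaaNotSupported; // resolve MSAA before Present instead
    return FlipCheck::Ok;
}

#ifdef _WIN32
// Fills a DXGI_SWAP_CHAIN_DESC1 that satisfies the requirements above.
inline DXGI_SWAP_CHAIN_DESC1 MakeFlipModelDesc(UINT width, UINT height)
{
    DXGI_SWAP_CHAIN_DESC1 desc = {};
    desc.Width = width;
    desc.Height = height;
    desc.Format = DXGI_FORMAT_R8G8B8A8_UNORM;   // illustrative choice
    desc.SampleDesc.Count = 1;                  // no MSAA on the swapchain itself
    desc.BufferUsage = DXGI_USAGE_RENDER_TARGET_OUTPUT;
    desc.BufferCount = 2;                       // flip model needs at least 2
    desc.Scaling = DXGI_SCALING_STRETCH;        // illustrative choice
    desc.SwapEffect = DXGI_SWAP_EFFECT_FLIP_DISCARD;
    return desc;
}
#endif
```

The runtime requirements (re-binding the back buffer after Present, and calling ResizeBuffers after SetFullscreenState) are not covered by this sketch.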

How to choose the right rendering and presentation resolutions

The traditional pattern for apps in the past has been to provide the user with a list of resolutions to choose from when the user selects exclusive fullscreen mode. With the ability of modern displays to seamlessly begin scaling content, consider providing users with the ability to choose a rendering resolution for performance scaling, independent from an output resolution, and even in windowed mode. Furthermore, applications should leverage IDXGIOutput6::CheckHardwareCompositionSupport to determine if they need to scale the content before presenting it, or if they should let the hardware do the scaling for them.

Your content may need to be migrated from one GPU to another as part of the present or composition operation. This is often true on multi-GPU laptops, or systems with external GPUs plugged in. As these configurations get more common, and as high-resolution displays become more common, the cost of presenting a full resolution swapchain increases. If the target of your swapchain isn’t the primary point of user interaction, as is often the case with VR titles that present a 2D preview of the VR scene into a secondary window, consider using a lower resolution swapchain to minimize the amount of bandwidth that needs to be transferred across different GPUs.
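To get a feel for the bandwidth involved, the following sketch computes a reduced swapchain size and the bytes one buffer occupies. `Extent`, `ScaleExtent`, and `BytesPerFrame` are hypothetical helpers, and the numbers assume an uncompressed buffer with a fixed bytes-per-pixel value; real cross-GPU transfer cost depends on the driver and hardware.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical width/height pair in pixels.
struct Extent {
    std::uint32_t width;
    std::uint32_t height;
};

// Scales both dimensions by `scale` (0 < scale <= 1), rounding to the
// nearest pixel and never returning a zero-sized dimension.
inline Extent ScaleExtent(Extent full, double scale)
{
    assert(scale > 0.0 && scale <= 1.0);
    auto scaled = [scale](std::uint32_t v) {
        const auto r = static_cast<std::uint32_t>(std::lround(v * scale));
        return r == 0 ? 1u : r;
    };
    return Extent{scaled(full.width), scaled(full.height)};
}

// Size of one uncompressed buffer at the given bytes per pixel.
inline std::uint64_t BytesPerFrame(Extent e, std::uint32_t bytesPerPixel)
{
    return static_cast<std::uint64_t>(e.width) * e.height * bytesPerPixel;
}
```

For example, a 3840x2160 buffer at 4 bytes per pixel is 33,177,600 bytes per frame, while halving each dimension to 1920x1080 brings that down to 8,294,400 bytes, a quarter of the data to move for each present.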

Other considerations

The first time in a frame that you ask the GPU to write to the swapchain back buffer is the point at which the GPU will stall, waiting for that buffer to become available. Push this first write as late in the frame as you can.

We think it’s pretty cool – check it out if you ever have a reason to visit the D3D team in person!
